It is an idea that managed to reach a consensus among lobbyists, researchers, lawmakers and platforms, and made its way into the Digital Services Act, the regulation that will be one backbone of how European democracy deals with the Internet.

Access to data for vetted researchers is Article 31 of the Digital Services Act, and it is a bad idea.

At first sight, it may sound good to put together « access », « data » and « researchers » in the same regulation, and to secure it by adding « vetted ». But on a closer look, the picture is more troubling. Let’s ask ourselves the following three questions: what kind of access, what is “data” and what is a “vetted researcher”?

What we really need is platform transparency, public access and whistleblowers (and for the Facebook papers leaked by Frances Haugen to be made available to everyone).

Dura Lex Sed Lex

Officially titled “Data Access and Scrutiny”, Article 31 of the DSA provides that platforms would grant access to “data” to some “vetted researchers”

“for the sole purpose of conducting research that contributes to the identification and understanding of systemic risks”

What is a systemic risk, one may ask? Systemic risks are defined in Article 26 of the DSA and cover the following:

- Dissemination of illegal content.

- Negative effects for the exercise of the fundamental rights to respect for private and family life, freedom of expression and information, the prohibition of discrimination and the rights of the child.

- Intentional manipulation of their service, including by means of inauthentic use or automated exploitation of the service, with an actual or foreseeable negative effect on the protection of public health, minors, civic discourse, or actual or foreseeable effects related to electoral processes and public security.

In other words, some vetted researchers would be allowed to have access to platforms’ data for monitoring the systemic risks.

What Access?

The very first problem arises with GDPR, the regulation on personal data protection. GDPR is one of the reasons advanced by the platforms for refusing to share data, or even for attacking researchers they dislike.

Another way to see it is that platforms could attack any researcher who publishes something that displeases them, on the ground of failing to secure or anonymise the data. Facebook is already using the “violating GDPR” accusation against researchers to shut down research projects it does not like. Algorithm Watch’s project monitoring Instagram was shut down after threats of legal action from Facebook using that exact pretence.

Facebook also used the “violating privacy” pretence to shut down another project, at NYU, researching ads. In a public post made last August, Facebook detailed the playbook of how it played users’ privacy against researchers’ access to data. This can also be the playbook for how Facebook is going to play “data access for researchers”, as long as the responsibility for securing, storing and anonymising the data falls on the researcher.

Access to data means platforms will give access to raw data, and the researcher has to deal with the GDPR burden.

Researchers “shall commit and be in a capacity to preserve the specific data security and confidentiality requirements corresponding to each request.”

In other words, the legal obligations of the GDPR are the researcher’s responsibility. Platforms grant access to (part of) their databases, and it is up to the researcher or academic institution to secure and anonymise the data if they want to use them.

This creates a burden on the researcher, and thus tight control over the research.

This particular aspect is currently being negotiated through a Code of Practice that will create an exemption from GDPR for sharing data with researchers. There are three ways this exemption could be negotiated:

- The publication is exempted (which means researchers are exempted, and the platforms have the responsibility to hand over processed, GDPR-compliant data to the researchers).

- The sharing is exempted (which means platforms are exempted from GDPR, and researchers bear the responsibility if they want to use or publish data).

- An intermediate body receives the data from the platform, processes them and allows access to vetted researchers (let’s all save some time and say that this intermediate body will be EDMO).

The first case is not likely to happen. It would simply allow the platforms to use GDPR as an excuse for not sharing data, and they would block and refuse any responsibility, as they are doing now. The second case is more worrying: it is basically the end of OSINT.

The restrictions on publishing that will come with access to data for vetted researchers have implications for Open Source Intelligence. Open Source INTelligence, also known as OSINT, now refers to the gathering, research, analysis and use of data that can be openly accessed throughout the internet. The very first rule of OSINT, as indicated by the name, is Open. An OSINT proof is a proof because anyone can trace it back to the source. The reader has to be able to redo the same steps that led to this proof. Even if OSINT researchers are granted access to data, as long as they cannot disclose these data publicly, there is no OSINT. If they do disclose them, it will be a breach of the agreement between the platform and the vetted researcher. There can be no OSINT in access to data for vetted researchers, unless it is coupled with public sharing of the accessed data.

Meanwhile, the negotiation on the (non-binding, voluntary) code is increasingly becoming a diversion from the DSA negotiations. And “access to data for vetted researchers” is increasingly becoming a way of diverting from the real deal: public access to data and transparency of the algorithm. Under the DSA, platforms are allowed to amend the request if “giving access to the data will lead to significant vulnerabilities for the security of its service or the protection of confidential information, in particular trade secrets.”

In other words, they are allowed to refuse any request that could make their algorithm transparent or understandable. In exchange for “access to data for vetted researchers”, we agree that algorithms, as legitimate trade secrets, are off-limits for research. Article 57 specifies that in some cases “The Commission may also order that platform to provide access to, and explanations relating to, its databases and algorithms.” There is no mention of researchers being involved there, but that access and the publication of some data would be a nice addition to democracy…

Ultimately, when it comes to asking platforms for access to their data, a simple question disappears: do we even need access?

Every scientific field has created its own way of “accessing data”, from inventing the microscope to developing archaeology or devising an experiment… Scientists constitute their own body of data and carefully select what they need for their research, define the field, the protocol, etc. Some researchers are already thinking of ways of accessing data without asking for the platforms’ goodwill. And the good news is that it is possible, by using GDPR and transparency!

However, it requires a research question, situated in a particular (number of) discipline(s), and requires researchers to assess what data are needed and their scope in depth and width, how to recruit research subjects, how to define an interaction strategy with data controllers, etc. This is all a normal part of research and does not require negotiating access with the platforms.

What Data?

And there is a crucial question we somehow forget to ask: What are these “data” and how are they cooked?

Access to data concerns “Very Large Online Platforms” (or VLOPs). These are platforms “currently estimated to be amounting to more than 45 million recipients of the service. This threshold is proportionate to the risks brought by the reach of the platforms in the Union; where the Union’s population changes by a certain percentage, the Commission will adjust the number of recipients considered for the threshold, so that it consistently corresponds to 10 % of the Union’s population.”

The DSA also specifies that “For the purposes of determining whether online platforms may be very large online platforms that are subject to certain additional obligations under this Regulation, the transparency reporting obligations for online platforms should include certain obligations relating to the publication and communication of information on the average monthly active recipients of the service in the Union.”

And here, right before your eyes, in the short time it took you to read these sentences, a monthly active user just became a member of the Union’s population. But Twitter, in its Terms of Service, allows multiple accounts. Facebook does not, but Instagram (belonging to “Meta”) does. One may open half a dozen Gmail accounts with the same phone number: is the “monthly active user” based on the phone number or the account? How is the location of the user established? Through the user’s IP address, or by studying the user’s browser?
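To make the ambiguity concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the toy accounts, the `Account` fields and the `count_recipients` helper are assumptions made for illustration, not how any platform actually counts. It only shows that the same five accounts yield different numbers of “recipients in the Union” depending on what is counted (accounts or phone numbers) and how location is inferred (IP address or browser settings).

```python
from dataclasses import dataclass

# Toy subset of Union member states, for illustration only.
EU = {"FR", "DE", "BE"}


@dataclass(frozen=True)
class Account:
    handle: str
    phone: str            # phone number used at sign-up (hypothetical)
    ip_country: str       # country inferred from the IP address
    browser_country: str  # country inferred from browser settings


# Hypothetical data: one person with three accounts, one behind a VPN.
ACCOUNTS = [
    Account("alice_main", "+33-1", "FR", "FR"),
    Account("alice_alt", "+33-1", "FR", "FR"),
    Account("alice_fan_page", "+33-1", "US", "FR"),  # VPN exit in the US
    Account("bob", "+49-2", "DE", "DE"),
    Account("carol", "+1-3", "BE", "US"),  # US browser, Belgian IP
]


def count_recipients(accounts, identity, location):
    """Count distinct identities whose inferred location is in the Union."""
    return len({identity(a) for a in accounts if location(a) in EU})


by_account_ip = count_recipients(ACCOUNTS, lambda a: a.handle, lambda a: a.ip_country)
by_phone_ip = count_recipients(ACCOUNTS, lambda a: a.phone, lambda a: a.ip_country)
by_account_browser = count_recipients(ACCOUNTS, lambda a: a.handle, lambda a: a.browser_country)
by_phone_browser = count_recipients(ACCOUNTS, lambda a: a.phone, lambda a: a.browser_country)

print(by_account_ip, by_phone_ip, by_account_browser, by_phone_browser)
```

With this toy data, the four definitions give 4, 3, 4 and 2 “recipients”: none of them is wrong, they simply measure different things.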

The point here is not to launch a debate over anonymity, the right to multiple accounts or the rights of internet bots. There is also a need to define what VLOPs are, and how responsibility differs depending on whether you are Facebook or Twitter, or OnlyFans or Patreon. But let’s just keep in mind that we rarely ask ourselves about manipulated metrics. And manipulating metrics is the backbone of the Internet economy: how to get your post to the top of the newsfeed or onto the first page of Google, how to buy likes, or how to repurpose a K-Pop fan page to boost President Duterte’s popularity on Facebook.

We have become data junkies. We need data to feed the machine learning process, have audience statistics, measure the impact of specific messages or posts, and feed big studies of “online conversations” with nicely coloured “clusters”. And like junkies, we don’t want to know where this drug is coming from, how it moves from one point to another and how it is cut. We need data, and we want data.

How are the platforms’ data compiled? No one knows. It is a secret algorithm that regularly changes and relies on a certain number of inauthentic or fake accounts. It is entirely different from one platform to another. Platforms will be more than happy to give access to data to vetted researchers. Platforms are data dealers. Literally, they are dealing data, and their entire economic model is based on dealing data. The junkie is asking the dealer for free doses, and the dealer loves to give a free sample to the most promising customers, so they get hooked on the product. And giving academic researchers access to data has a huge upside for the platforms.

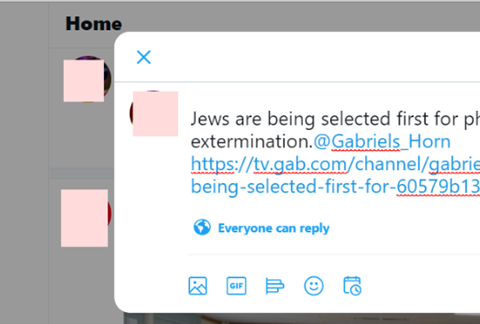

Let’s take a look at the cleverest dealer in the area: Twitter. Twitter, through its API, has given researchers access to data for a very long time. The results are incredible for Twitter: while Facebook is facing controversy, Twitter is left untouched. Studies of “online conversation” are flourishing, together with online tools for creating nice coloured “clusters”. All these studies are made with Twitter data. The coloured clusters of “interactions” study hashtags, retweets or “favs”, and the very particular dynamic of Twitter online conversations. In truth, Twitter metrics rely on hate speech as much as Facebook’s do, and everyone knows it.

The tweet generating the most conversation and reaction will be put forward, and the best way to get a reaction is with controversy, anger, hate, emotional speech. Whichever way we turn it, an unpopular opinion will continue to generate more debate and discussion than undebatable facts.

Still, no researcher with access to data will openly question the relevance of the data. Why would anyone? Data do not lie, and the studies made with Twitter data have to be scientific and neutral, because the authors have to be scholars and experts. And this is how the data are cleaned: access to data for vetted researchers means access to academic science for platforms’ commercial data.

For the platforms, it is a dream come true: researchers giving academic access to vetted data. What is a commercial product sold and dealt by the platform will gain prestige and credibility, and make its way into PhD bibliographies, the academic peer review process, sociological statistics and scientific, mathematical formulas. And the “social network” becomes the “sociologic network”. Platforms are experts at monetising academic science. Google itself became the giant it is by applying the academic citation system to its algorithm. Access to science for platforms’ data is an important gift and does not come without consequences.

What Researchers?

Finally, the last but not least of the questions: will the next Cambridge Analytica be a vetted researcher?

In order to be vetted, researchers shall be affiliated with academic institutions, be independent from commercial interests, have proven records of expertise in the fields related to the risks investigated or related research methodologies, and shall commit and be in a capacity to preserve the specific data security and confidentiality requirements corresponding to each request.

The fields are pretty broad, as we see in Article 26: the electoral process, public security, public health, intentional manipulation. This means any expert in one of these fields with access to data will automatically become an academic researcher.

The risk here is to create a particular academic field specialised in accessing platforms’ data. Let’s face it, the academic field of disinformation is still new, filled with uncertainty, methodological flaws, early hypotheses… And still, it is flooded with money and grants from think tanks, institutes or governments that realised in 2016, with the election of Donald Trump, that platform manipulation of democratic debate and online hate was an issue, and rushed to fill the gap. These phenomena are not necessarily harmful, and research needs money. But this tends to attract experts in expertise, TV scholars or opinion book writers, who were yesterday in “conspiratorial narratives” and the day before in “terrorism and geopolitics”. Now they are looking at the green grass of “platform data”. Being granted access to data will contribute to creating vetted researchers. With access to data, they would be allowed to bypass peer review and have an obvious advantage for publishing. The academic process for creating scholars is far from perfect, but do we want to add platforms’ data to all the existing imperfections?

The other elephant in the room is: who will be in charge of the vetting, and what will the process be? Let’s phrase it differently: if a “Cambridge Analytica” institute applies for access to data, who will be in charge of refusing it, and on what grounds? Are we going to turn down a “Moscow Center for Digital Democracy” even if it is affiliated with a Russian academic institution? Does China have universities and people studying the internet? Are we planning not to vet them and to vet only Western institutions?

After all, some Western academics, vetted by their institutions, have been known for spreading harmful disinformation. From Theodore Postol and the lies on Assad’s chemical weapons debunked by Bellingcat, to Professor Didier Raoult and the belief in Chloroquine as a miraculous cure for COVID, recent years have been filled with instances of “vetted researchers (…) affiliated with academic institutions, (…) independent from commercial interests, [with] proven records of expertise in the fields related to the risks investigated or related research methodologies”, as Article 31 reads.

The Working Group on Syria, Propaganda and Media was created out of trusted scholars to support conspiracies over Assad’s chemical weapons, and again had to be debunked by Bellingcat. This group of academics has a proven record of appetite for data and demonstrated its will to use it against Syrian anti-Assad activists.

The prestigious London School of Economics also happened to have ties with the Gaddafi regime.

And Cambridge Analytica came out of Cambridge University…

Access to Data for Vetted Researchers is not a democratic move. Transparency is.

Frances Haugen is now one of the most famous whistleblowers from Facebook. She toured Europe and testified before MEPs during a public hearing titled “Whistleblower’s testimony on the negative impact of big tech companies’ products on users.”

She appears to be a strong proponent of algorithm transparency and public access to data. Several times she is drawn into the debate over “access to data for vetted researchers” and has to rephrase and recalibrate to put the idea of public access at the centre.

The first time, she has to recalibrate from “gathering data” to “forcing platforms to disclose”:

« Part of why regulators should be gathering that… have… euh (looking up) forcing platforms to disclose that data or forcing them to disclose their harms publicly. »

She insisted on « Having the ability for the public to see risk assessment, for consumers to have informed traces. »

She would like to see openness not restricted to vetted academic researchers:

« I strongly encourage you to not just give data to academics because the population of incredibly competent people who are experts in this space exist outside of academia and outside of government, and they will continue to do so. »

Finally, she advocated for experts to have access to platforms’ algorithms and for public access to data:

« We need to build that ecosystem of people building the muscle to understand Facebook if we want to be safe. And actually, I would like to add one thing, I would love to do commentary, if I had data, right? And I am not an academic, I probably won’t get accepted but you want to have people like me who have the expertise to see inside the companies that illustrates patterns with public data. »

Data that has been accessed by researchers has to become public at some point when studies are released. Researchers cannot be held responsible for security and compliance with GDPR regarding that release. Access to data for vetted researchers has to be coupled with public access to the data used by researchers.

How the data is made has to be transparent. If not, commercial data protected by private-interest secrecy shall not become scientific data. Algorithmic transparency has to happen at some point, and both researchers and the public need to understand how the data is compiled.

The vetting process has to be carefully thought out and transparent. An “academic institution” is not in itself a guarantee that no data can be used in a way harmful to democracy. Neither is the “media” category, as we now know what harm can happen with the media exemption. And the vetting process will have to deal with access to data for “vetted activists”, and this will demand an extremely transparent process the public can fully trust.

Researchers can (and should) create their own ways of gathering data. We need protection for researchers, journalists or activists and their right to publish and share their conclusions publicly. Negotiating access to data for vetted researchers is only a way to negotiate specific conditions under which this protection applies (for vetted researchers).

Access to data for researchers without these corrective patches is not a thing to wish for.